AI in Cyber Security

Over the last 18 months, discussions about artificial intelligence (AI), and generative AI in particular, have ranged from excitement and optimism about its transformative potential to fear and uncertainty about the new risks it introduces.

New research[1] commissioned by Darktrace shows that 89 percent of IT security teams polled globally believe AI-augmented cyber threats will have a significant impact on their organization within the next two years, yet 60 percent believe they are currently unprepared to defend against these attacks. Their concerns include a greater volume and sophistication of malware that targets known vulnerabilities, and increased exposure of sensitive or proprietary information through the use of generative AI tools.

At Darktrace, we monitor trends across our global customer base to understand how the challenges facing security teams are evolving alongside industry advancements in AI. We’ve observed that AI, automation, and cybercrime-as-a-service have increased the speed, sophistication, and efficacy of cyberattacks.

How AI Impacts Phishing Attempts

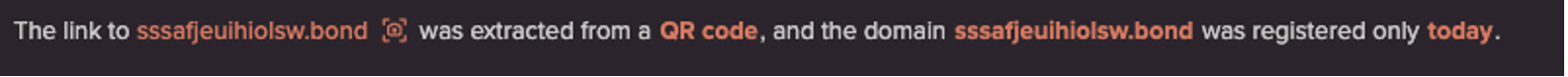

Darktrace has observed immediate impacts on phishing, which remains one of the most common forms of attack. In April 2023, Darktrace shared research that found a 135 percent increase in ‘novel social engineering attacks’ in the first two months of 2023, coinciding with the widespread adoption of ChatGPT[2]. These phishing emails showed a strong linguistic deviation, both semantic and syntactic, from other phishing emails, which suggested to us that generative AI is giving threat actors an avenue to craft sophisticated, targeted attacks at speed and scale.

A year later, we’ve seen this trend continue. Darktrace customers received approximately 2,867,000 phishing emails in December 2023 alone, a 14 percent increase over the volume observed in September[3]. Between September and December 2023, phishing attacks that used novel social engineering techniques grew by 35 percent on average across the Darktrace customer base[4].

These observations reinforce trends that others in the industry have shared. For example, Microsoft and OpenAI recently published research on tactics, techniques, and procedures (TTPs) augmented by large language models (LLMs) that they have observed nation-state threat actors using. These include using LLMs to draft and generate social engineering attacks, inform reconnaissance, and assist with vulnerability research.

The Rise of Cybercrime-as-a-Service

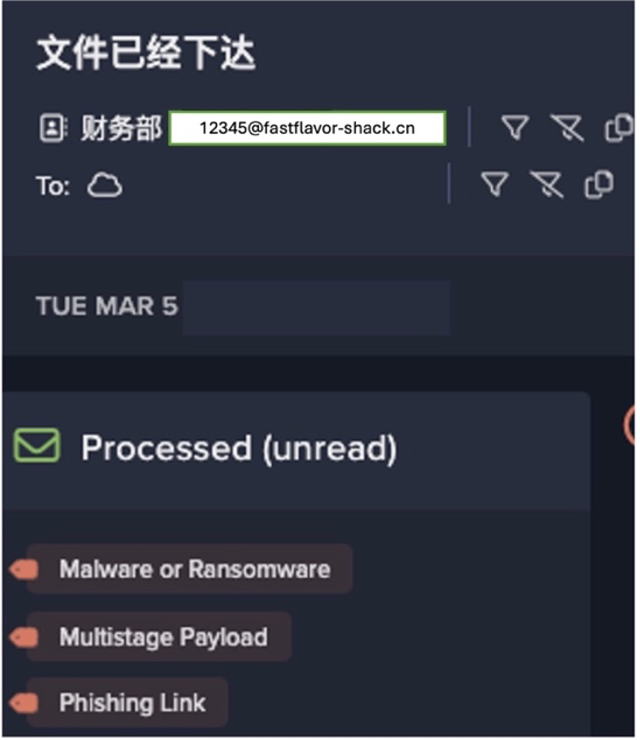

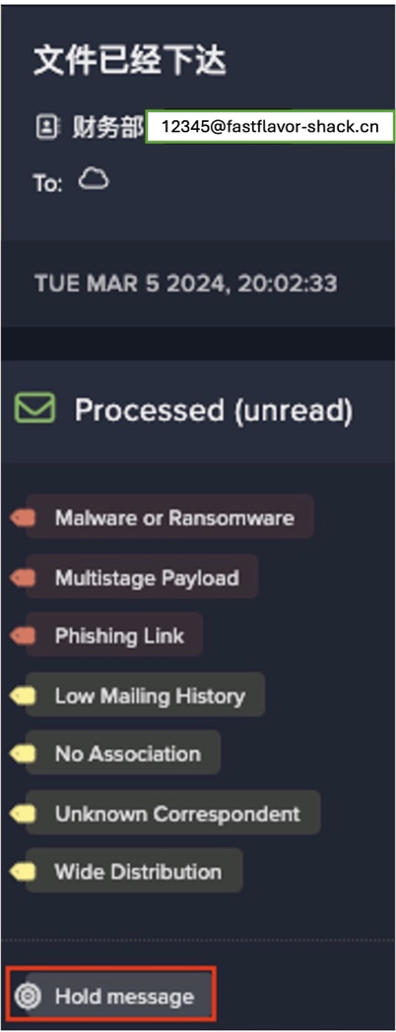

The growing challenge facing defenders cannot be attributed to AI alone. The rise of cybercrime-as-a-service is also changing the dynamic. Darktrace’s 2023 End of Year Threat Report found that cybercrime-as-a-service continues to dominate the threat landscape, with malware-as-a-service (MaaS) and ransomware-as-a-service (RaaS) tools making up the majority of malicious tools in use by attackers. The as-a-service ecosystem can provide attackers with everything from pre-made malware to phishing email templates, payment processing systems, and even helplines, enabling bad actors to mount attacks with limited technical knowledge.

These trends make it clear that attackers now have a more widely accessible toolbox that lowers their barrier to entry.

AI Enabling Accidental Insider Threats

However, the new risks facing businesses do not come from external threat actors alone. The use of generative AI tools within the enterprise introduces a new category of accidental insider threat. Employees using generative AI tools now have easier access to more organizational data than ever before, and even the most well-intentioned employee could unintentionally leak or access restricted, sensitive data via these tools. In the second half of 2023, we observed that approximately half of Darktrace customers had employees accessing generative AI services. As this usage grows, organizations need policies that define acceptable use cases for generative AI tools, strong data governance, and the ability to enforce those policies to minimize risk.

It is inevitable that AI will increase the risks and threats facing an organization, but this is not an unsolvable challenge from a defensive perspective. While advancements in generative AI may be worsening issues like novel social engineering and creating new types of accidental insider threats, AI itself offers a strong defense.

The Shift to Proactive Cyber Readiness

According to the World Economic Forum’s Global Cybersecurity Outlook 2024, the number of organizations that “maintain minimum viable cyber resilience is down 30 percent compared to 2023”, and “while large organizations have demonstrated gains in cyber resilience, small and medium-sized companies showed significant decline.” The importance of cyber resilience cannot be overstated in the face of today’s increasingly as-a-service, automated, and AI-augmented threat landscape.

Historically, organizations have waited for incidents to happen and relied on known attack data for threat detection and response, making it nearly impossible to identify never-before-seen threats. The traditional security stack has also relied heavily on point solutions that each protect a different part of the digital environment, with individual tools for endpoint, email, network, on-premises data centers, SaaS applications, cloud, OT, and beyond. These point solutions fail to correlate disparate incidents into a complete picture of an orchestrated attack. Even when tools that stitch together events from across the enterprise are added, the stack remains reactive, focused heavily on threat detection and response.

Organizations need to evolve from a reactive posture to a stance of proactive cyber readiness. To do so, they need an approach that proactively identifies internal and external vulnerabilities, identifies gaps in security policy and process before an attack occurs, breaks down silos to investigate all threats (known and unknown) during an attack, and uplifts the human analyst beyond menial tasks to incident validation and recovery after an attack.

AI can help break down silos within the SOC and offer a more proactive way to scale up and augment defenders. It provides richer context when it is fed information from multiple systems, data sets, and tools within the stack, and it can build an in-depth, real-time behavioral understanding of a business that humans alone cannot.

Lessons From AI in the SOC

At Darktrace, we’ve been applying AI to the challenge of cyber security for more than ten years, and we know that proactive cyber readiness requires the right mix of people, process, and technology.

When the right AI is applied responsibly to the right cyber security challenge, the impact on both the human security team and the business is profound.

AI can bring machine speed and scale to some of the most time-intensive, error-prone, and psychologically draining components of cyber security, helping humans focus on the value-added work that only they can provide. Incident response and continuous monitoring are two areas where AI has already been proven to effectively augment defenders. For example, a civil engineering company used Darktrace’s AI to relieve its SOC team of the repetitive, manual tasks of analyzing and responding to email incidents. The analysts estimated they were each spending 10 hours per week on email incident analysis. With AI autonomously analyzing and responding to email incidents, the analysts could reclaim approximately 20 percent of their time to focus on proactive cyber security measures.

An effective human-AI partnership is key to proactive cyber readiness and can directly benefit the working lives of defenders. It can help reduce burnout, support data-driven decision-making, and reduce reliance on hard-to-find specialized talent, whose scarcity has driven a skills shortage in cyber security for many years. Most importantly, AI can free up team members to focus on more meaningful tasks, such as compliance initiatives, user education, and sophisticated threat hunting.

Advancements in AI are happening at a rapid pace. As we’ve already observed, attackers will be watching these developments and looking for ways to use them to their advantage. Fortunately, AI has already proved to be an asset for defenders, and embracing a proactive approach to cyber resilience can help organizations increase their readiness for this next phase. Prioritizing cyber security will be an enabler of innovation and progress as AI development continues.

--

Join Darktrace on 9 April for a virtual event exploring the innovations needed to get ahead of the rapidly evolving threat landscape. Register today to hear more about what is coming to Darktrace’s offerings.

References

[1] The survey was undertaken by AimPoint Group and Dynata on behalf of Darktrace between December 2023 and January 2024. The research polled 1,773 security professionals in positions across the security team, from junior roles to CISOs, across 14 countries: Australia, Brazil, France, Germany, Italy, Japan, Mexico, Netherlands, Singapore, Spain, Sweden, UAE, UK, and USA.

[2] Based on the average change in email attacks between January and February 2023 detected across Darktrace/Email deployments, with outliers controlled for.

[3] Average calculated across Darktrace customers from 31 August to 21 December 2023.

[4] Average calculated across Darktrace customers from 31 August to 21 December 2023. Novel social engineering attacks use linguistic techniques that differ from those used in the past, as measured by a combination of semantics, phrasing, text volume, punctuation, and sentence length.